Momentum - Last Iterate Convergence and Variance Reduction

Abstract

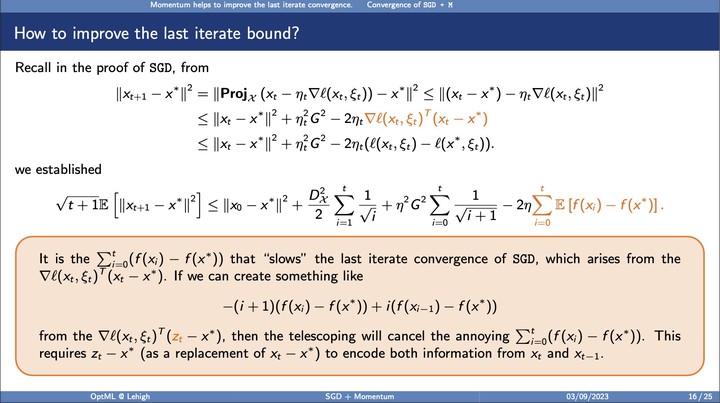

Stochastic gradient descent with momentum (SGD + M) is very popular, yet its convergence property is not well understood. Only recently, the work a proved that stochastic heavy-ball’s (SGD + H) convergence speed is not faster than that of SGD alone (convex case). In this talk, we review some literature on this topic and results. It seems that the advantage of SGD + M over SGD in convex case is to improve the last iterate convergence.

Date

Feb 11, 2023 6:15 PM — Feb 11, 2022 8:15 PM

Event

OptML@Lehigh 2023 Spring

Location

Bethlehem, PA, United States